Get started with OpenMP

by Goutham

We will look at OpenMP, one of the most familiar parallel programming models, and its functionality from a student's point of view. We will then get our hands dirty by trying out a few examples of parallel code.

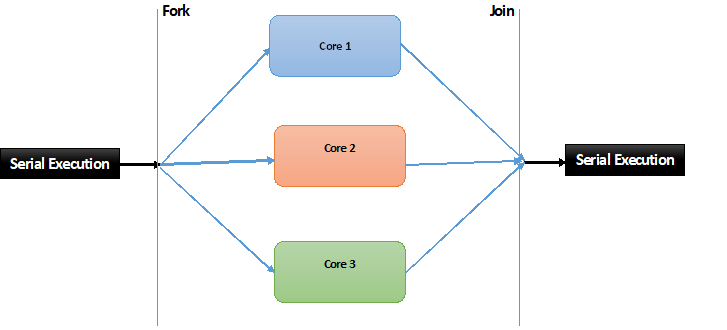

OpenMP is a shared memory programming model that can improve the performance of your code. Well, that escalated quickly. From our basic operating systems course we know that threads share memory. A process that spawns two or more threads shares its memory with them, so that the threads can communicate effectively. By default each thread has its own stack, registers and program counter, but shares the code (text) segment, the global data and the heap with the other threads. Hence the thread model is the base for Fork-Join parallelism.
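To make the shared-versus-private split concrete, here is a minimal sketch. The function name count_visits is made up for this post, and the code is written so that it still builds (and returns 1) when OpenMP is switched off. Every thread adds its own stack variable to a single counter that lives on the heap.

```cpp
// Hypothetical helper for illustration: returns how many threads
// added their private value to the one shared counter.
int count_visits() {
    int* total = new int(0);      // heap memory: one copy, visible to all threads

    #pragma omp parallel
    {
        int local = 1;            // declared inside the block: one copy per thread (its stack)

        #pragma omp atomic        // make the update of the shared counter safe
        *total += local;
    }

    int result = *total;          // equals the number of threads that ran the block
    delete total;
    return result;
}
```

Calling count_visits() from main on a machine with four threads available would typically give 4. Without the atomic directive, two threads could update the counter at the same moment and one increment could be lost.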

To learn about multithreaded algorithms, refer to Chapter 27 of CLRS (needless to say, buy CLRS). CLRS talks about achieving parallelism using the keywords Parallel, Spawn and Sync. OpenMP programming follows similar constructs.
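As a rough illustration of how the Spawn and Sync keywords carry over, here is a sketch of a recursive Fibonacci, a classic fork-join example, written with OpenMP tasks. The mapping (task for Spawn, taskwait for Sync) is my own analogy, and the function names fib and parallel_fib are chosen just for this post. Tasks need OpenMP 3.0 or newer.

```cpp
// Fork-join Fibonacci, assuming "task" plays the role of Spawn
// and "taskwait" the role of Sync.
long fib(int n) {
    if (n < 2) return n;
    long x, y;

    #pragma omp task shared(x)    // "spawn": another thread may compute this
    x = fib(n - 1);

    y = fib(n - 2);               // the current thread keeps working meanwhile

    #pragma omp taskwait          // "sync": wait for the spawned task to finish
    return x + y;
}

long parallel_fib(int n) {
    long result = 0;
    #pragma omp parallel          // create the team of threads
    #pragma omp single            // only one thread starts the recursion
    result = fib(n);
    return result;
}
```

For example, parallel_fib(20) gives 6765. A real program would stop creating tasks below some problem size, since a task for every tiny call costs more than it saves.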

In this post we will use C/C++ as the language. (OpenMP works with Fortran too.)

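#include <omp.h> declares two types of functions:

- Functions that are used to query and control the parallel environment, for example omp_get_thread_num() and omp_set_num_threads().

- Functions that are used to synchronize data access, for example the lock routines omp_set_lock() and omp_unset_lock().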
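Here is a small sketch that uses one function of each kind. The function name collect_thread_ids is made up for this post, and the #ifdef guard is my addition so that the code also builds when OpenMP is switched off. Each thread asks for its own id and then takes a lock before touching the shared vector.

```cpp
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

// Hypothetical helper: returns the ids of all threads that ran the block.
std::vector<int> collect_thread_ids() {
    std::vector<int> ids;
#ifdef _OPENMP
    omp_lock_t lock;
    omp_init_lock(&lock);

    #pragma omp parallel
    {
        int id = omp_get_thread_num();   // environment function: who am I?

        omp_set_lock(&lock);             // synchronization function: enter
        ids.push_back(id);               // only one thread at a time gets here
        omp_unset_lock(&lock);           // leave
    }

    omp_destroy_lock(&lock);
#else
    ids.push_back(0);                    // serial build: only the initial thread exists
#endif
    return ids;
}
```

The ids come back in whatever order the threads happened to take the lock, so the order can change from run to run.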

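#pragma omp is used to tell the compiler that the statement or block following this line is governed by an OpenMP directive. For example, #pragma omp parallel marks the block that follows for parallel execution.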

Consider the following code snippet and guess the output.

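    #include <omp.h>
    #include <iostream>

    using namespace std;

    int main()
    {
        // Every thread in the team executes this block once.
        #pragma omp parallel
        {
            cout << "Start" << endl;
            for (long i = 0; i < 1000; i++) ;   // empty loop standing in for some work
            cout << "End" << endl;
        }
        return 0;
    }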

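The popular answer for the above code (on a dual-core machine) would be

Start End Start End

which is not always true. Each thread runs the whole block, and nothing orders one thread's statements relative to the other's. Consider the case in which 'i' iterates up to a huge number like 10000000: the second thread will very likely have reached its Start statement before the first thread's loop is completed.

Hence the output is non-deterministic: it is either Start Start End End or Start End Start End. (Since cout is not synchronized between the threads, the words and newlines themselves may also get mixed up.)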
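To see the ordering without the text getting mixed up, one option is to record the events in a shared list instead of printing them. This is only a sketch: the Event struct and the record_events function are made up for this post, and critical is a directive we have not covered yet (it lets one thread at a time run the statement after it).

```cpp
#include <string>
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

struct Event { int thread; std::string what; };   // hypothetical log entry

// Returns the Start/End events in the order they actually happened.
std::vector<Event> record_events() {
    std::vector<Event> events;

    #pragma omp parallel
    {
        int id = 0;
#ifdef _OPENMP
        id = omp_get_thread_num();
#endif
        #pragma omp critical          // one thread at a time appends to the list
        events.push_back({id, "Start"});

        for (long i = 0; i < 1000; i++) ;   // same empty loop as in the post

        #pragma omp critical
        events.push_back({id, "End"});
    }
    return events;
}
```

Each thread's Start always comes before its own End, but how the entries of different threads are interleaved can change from run to run.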

The code given above is written for the g++ compiler. In addition to the normal compile command, you have to add the -fopenmp flag.

E.g. g++ sample.cpp -fopenmp -o << binaryname >>
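If the flag is left out, g++ simply ignores the OpenMP pragmas and the program runs on a single thread, so it is worth checking that OpenMP is really switched on. A minimal sketch, relying on the _OPENMP macro that OpenMP compilers define (the function name openmp_version_macro is made up for this post):

```cpp
// Returns the value of _OPENMP (a yyyymm date identifying the supported
// OpenMP specification), or 0 when the code was compiled without OpenMP.
int openmp_version_macro() {
#ifdef _OPENMP
    return _OPENMP;
#else
    return 0;
#endif
}
```

If this returns 0, the -fopenmp flag is missing or misspelled in the compile command.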

Let us dive further and compute the sum of the first 1000 numbers in parallel. In addition to the previous parallel directive, we need a new directive to parallelize the for loop.
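One possible shape for this, as a sketch only: the combined parallel for directive splits the loop iterations among the threads, and the reduction clause (my choice here, other approaches exist) gives each thread its own partial sum and adds them up at the end. The function name parallel_sum is made up for this post.

```cpp
// Sum of 1..n, with the iterations shared among the threads.
long parallel_sum(long n) {
    long sum = 0;

    // Each thread accumulates into a private copy of sum;
    // the copies are combined with + when the loop ends.
    #pragma omp parallel for reduction(+:sum)
    for (long i = 1; i <= n; i++) {
        sum += i;
    }
    return sum;
}
```

For n = 1000 the result is 1000 * 1001 / 2 = 500500, no matter how many threads take part.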